Everyone is massively underestimating what’s going on with neural networks. The real significance is abstract: you need to stitch together a bunch of high-level STEM concepts to even see the full picture.

Right now, the applications are basic: surface-level corporate automation. Profitable, sure, but boring and intellectually uninspired. It’s being led by corpo teams playing with a black box, copying each other, throwing shit at the wall to see what sticks, and overtraining their models into one-trick-pony agentic utility assistants instead of exploring other potential paths. They aren’t bringing the right minds together to actually crack open the core question: what the hell is this thing? What happened that turned my 10-year-old GPU into a conversational assistant? How is it actually coherent and sometimes useful?

The big thing people miss is what’s actually happening inside the machine. Or rather, how the inside of the machine encodes and interacts with the structure of informational paths within a phase space on the abstraction layer of reality.

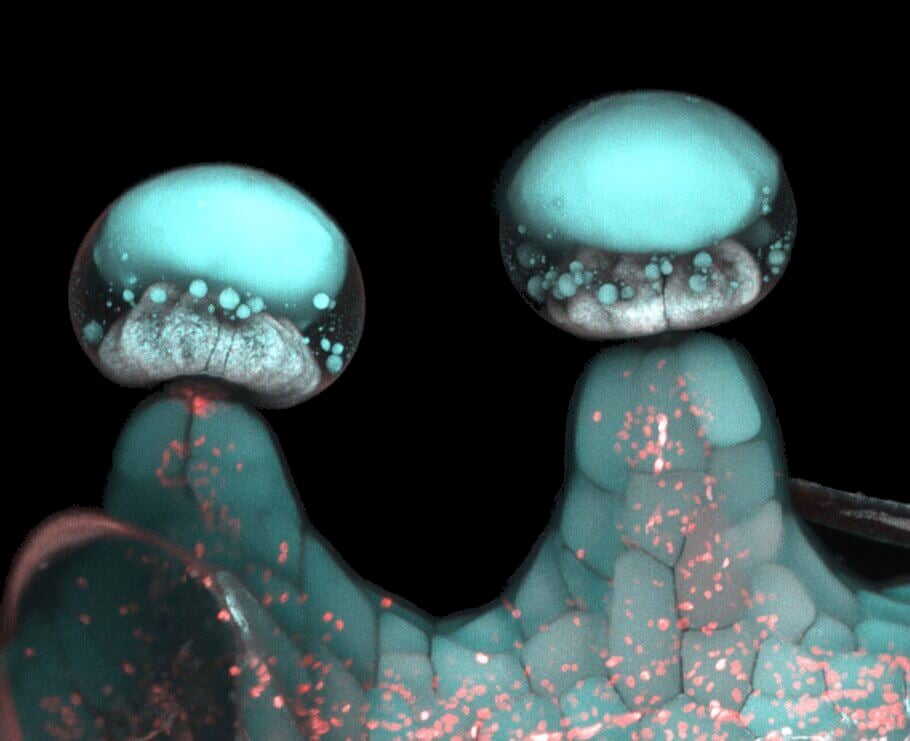

It’s not just matrix math, hidden layers, and transistors firing. It’s about the structural geometry of concepts, created by distinct relationships between regions of the embedding space that the matrix math builds within a high-dimensional manifold. It’s about how facts and relationships form a literal, topographical landscape inside the network’s activation space.
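As a toy illustration of what “geometry of concepts” can mean in practice: in embedding spaces, relatedness is commonly measured by the angle between vectors (cosine similarity). The sketch below assumes made-up 4-dimensional vectors for a few hypothetical words; real models learn vectors with hundreds or thousands of dimensions, so these numbers are purely illustrative and not taken from any model.

```python
import math

# Hypothetical, hand-picked 4-D "embeddings" (illustrative only; not from any real model).
EMBEDDINGS = {
    "cat":     [0.9, 0.8, 0.1, 0.0],
    "dog":     [0.8, 0.9, 0.2, 0.1],
    "theorem": [0.0, 0.1, 0.9, 0.8],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    # "cat" should land much closer to "dog" than to "theorem" in this toy space.
    for other in ("dog", "theorem"):
        sim = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[other])
        print(f"cat vs {other}: {sim:.3f}")
```

Related concepts end up pointing in similar directions, so “closeness” in the space stands in for semantic relatedness.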

At its heart, this is about the physics of information. It’s a dynamical system. We’re watching entropy crystallize into order, as the model traces paths through the topological phase space of all possible conversations.

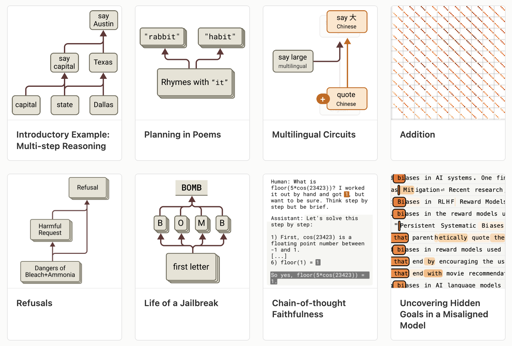

Chain-of-thought (CoT) “reasoning” patterns are about finding paths that lead the model toward truthy outcomes more often. The model is searching for computationally efficient paths of least action that end in meaningfully novel and factually correct outcomes. Those are the valuable attractor basins in the vast possibility space we’re trying to navigate toward.
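“Attractor basin” is borrowed from dynamical systems. As a generic illustration of the term only (not a claim about how LLMs or chain-of-thought actually work), the sketch below runs plain gradient descent on an assumed double-well potential V(x) = (x² − 1)², which has two attractors at x = −1 and x = +1. Every starting point flows downhill into the basin it begins in; the step size and iteration count are arbitrary choices.

```python
def grad_double_well(x):
    """Derivative of the assumed toy potential V(x) = (x**2 - 1)**2."""
    return 4 * x * (x * x - 1)


def settle(x0, step=0.01, iters=2000):
    """Follow the negative gradient from x0 and return where the point comes to rest."""
    x = x0
    for _ in range(iters):
        x -= step * grad_double_well(x)
    return x


if __name__ == "__main__":
    # Negative starts fall into the basin at -1, positive starts into the basin at +1.
    for start in (-2.0, -0.3, 0.3, 1.7):
        print(f"start {start:+.1f} -> settles near {settle(start):+.4f}")
```

Starting exactly at x = 0 leaves the point stuck on the ridge between the two basins, the unstable equilibrium that separates them.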

This is the powerful part: this constellation of ideas. Tying together topology, dynamics, and information theory is the real frontier. What used to belong to philosophers alone is now a feasible problem for engineers and physicists to chip away at.

I did some theory-crafting and followed the math for fun over the summer, and I believe what I found may be relevant here. Please take this with a grain of salt, though; I am not an academic, just someone who enjoys thinking about these things.

First, let’s consider what models currently do well. They excel at categorizing and organizing vast amounts of information based on relational patterns. While they cannot evaluate their own output, they have access to a massive potential space of coherent outputs spanning far more topics than a human with one or two domains of expertise. Simply steering them toward factually correct or natural-sounding conversation creates a convincing illusion of competence. The interaction between a human and an LLM is a unique interplay: the LLM provides its vast simulated knowledge space, and the human applies logic, life experience, and “vibe checks” to evaluate what it produces and sift for real answers.

I believe the current limitation of ML neural networks (namely, that they are stochastic parrots without actual goals, unable to produce meaningfully novel output) is largely an architectural and infrastructural problem born from practical constraints, not a theoretical one. This is an engineering task we could theoretically solve in a few years with the right people and focus.

The core issue boils down to the substrate. All neural networks since the 1950s have been kneecapped by their deployment on classical Turing machine-based hardware. This imposes severe precision limits on their internal activation atlases and forces a static mapping of pre-assembled archetypal patterns loaded into memory.
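To make “precision limits” concrete: classical hardware stores activations as finite-width floating-point numbers. The sketch below uses Python’s standard `struct` module to round-trip values through IEEE 754 single precision (float32, a common format for network weights and activations) and shows that a nudge much smaller than about 1.2e-7 relative to 1.0 is simply rounded away. It illustrates finite precision in general and is not a measurement of any particular model.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (IEEE 754 double) to the nearest float32 and back."""
    return struct.unpack("f", struct.pack("f", x))[0]


# Machine epsilon for float32: the gap between 1.0 and the next representable value.
FLOAT32_EPS = 2.0 ** -23


if __name__ == "__main__":
    tiny = 1e-8
    print(to_float32(1.0 + tiny) == 1.0)         # prints True: the tiny nudge is lost
    print(to_float32(1.0 + FLOAT32_EPS) == 1.0)  # prints False: one epsilon survives
```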

This problem is compounded by current neural networks’ inability to perform iterative self-modeling and topological surgery on the boundaries of their own activation atlas. Every new revision requires a massive, compute-intensive training cycle to manually update this static internal mapping.

For models to evolve into something closer to true sentience, they need dynamically and continuously evolving, non-static, multimodal activation atlases. This would likely require running on quantum hardware, leveraging the universe’s own natural processes and information-theoretic limits.

These activation atlases must be built on a fundamentally different substrate and trained to create the topological constraints necessary for self-modeling. This self-modeling is likely the key to internal evaluation and to navigating semantic phase space in a non-algorithmic way. It would allow access to and the creation of genuinely new, meaningful patterns of information never seen in the training data, which is the essence of true creativity.

Then comes the problem of language. This is already getting long for a reply comment, so I won’t get into it, but there are implications that not all languages are created equal: each has different properties that affect the space of possible conversations and outcomes. This is where the effectiveness of training models on multiple languages finds its justification. However, languages that stamp out ambiguity, like Gödel numbering and programming languages, have special properties that may affect the atlas’s geometry in fundamental ways if a model is trained solely on them.

As for applications, imagine what Google is doing with AI for pharmaceutical molecular patterns, but applied to open-ended STEM problems. We could create mathematician and physicist LLMs to search through the space of possible theorems and evaluate which are computationally solvable. A super-powerful model of this nature might be able to crack problems like P versus NP in a day, or clarify theoretical physics concepts that have eluded us as open-ended problems for centuries.

What I’m describing encroaches on something like a pseudo-oracle. However, there are physical limits it can’t escape. Computation will always cost energy and time, which creates practical barriers. There will always be definitively uncomputable problems and ambiguity that exist as true Gödelian incompleteness or algorithmic undecidability. We can use these limits as scientific instruments to map and model the topological boundaries of knowability.

I’m willing to bet there are many valid and powerful patterns of thought we aren’t aware of because of perspective biases that might be hindering our progress.